As SEO professionals, we know a fair amount about how Google works. Its algorithm updates can often be traced back to published patents, and their fundamental purpose is to eliminate questionable SEO practices.

By questionable practices, we mean any practice that attempts to exploit flaws in Google's algorithm to achieve better rankings. Google penalizes websites that do this because the content they push onto the results pages is generally of poor quality, which degrades the search results as a whole.

Anyone who has been playing the organic SEO game for several years is well aware of the main black hat tactics that Google penalizes (we will see some concrete examples further down in the article).

🚀 Quick read: 3 Google patents to know to avoid an SEO penalty

- Patent of October 8, 2013, regarding "content spinning": automatic rewriting of identical pages to avoid duplicate content.

- Patent of December 13, 2011, regarding "keyword stuffing": cramming a page with keywords to rank a site for a single term.

- Patent of March 5, 2013, regarding "cloaking": content camouflage to deceive the algorithm.

❓ Why is the way Google identifies Black Hat tactics important?

Because you don't want to accidentally make SEO mistakes that get you penalized! Google will assume you are trying to take advantage of the system.

In reality, you simply made some costly SEO mistakes because you didn't know better. To understand how Google's algorithm identifies bad SEO practices (and therefore how to avoid making these mistakes), you need to review Google's patents on some of the most common black hat tactics.

💫 Content spinning

The patent in question: "Identifying gibberish content in resources" (patent October 8, 2013)[1]

Content spinning means rewriting the same publication hundreds of times to increase a site's number of links and traffic, while avoiding being flagged as duplicate content. Some sites even manage to generate revenue from this type of content through advertising links.

However, since rewriting content is a rather tedious task, many sites turn to automatic writing software that swaps out nouns and verbs. This usually results in very poor quality content or, in other words, gibberish.

The patent explains how Google detects this type of content by identifying incomprehensible or incorrect sentences contained in a web page. The system used by Google is based on various factors to assign a contextual score to the page: this is the "gibberish score".

Google uses a language model that can recognize when a sequence of words is artificial. It identifies and analyzes the n-grams on a page and compares them with the n-grams found on other websites. An n-gram is a contiguous sequence of elements (here, words).

From there, Google generates two scores: a language model score and a "query stuffing" score, which reflects how often certain terms are repeated in the content. The two are then combined into the gibberish score, which is used to decide whether the content's position on the results page should be changed.
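To make the idea more concrete, here is a minimal sketch of how two such signals could be combined. It is not Google's implementation: the function names, the tiny reference corpus, the use of 2-grams, and the 50/50 weighting are all assumptions chosen for illustration.

```python
from collections import Counter


def bigrams(words):
    """Return the list of adjacent word pairs (2-grams)."""
    return list(zip(words, words[1:]))


def language_model_score(text, reference_counts):
    """Fraction of the page's bigrams that also occur in a reference corpus.

    A low value suggests unnatural word sequences. (Toy stand-in for a
    real language model.)
    """
    grams = bigrams(text.lower().split())
    if not grams:
        return 0.0
    known = sum(1 for g in grams if reference_counts[g] > 0)
    return known / len(grams)


def query_stuffing_score(text, query_terms):
    """Share of the page's words that are one of the given query terms."""
    words = text.lower().split()
    if not words:
        return 0.0
    terms = {t.lower() for t in query_terms}
    return sum(1 for w in words if w in terms) / len(words)


def gibberish_score(text, reference_counts, query_terms, weight=0.5):
    """Combine both signals; a higher value means more likely gibberish.

    The linear combination and the default weight are assumptions.
    """
    unnatural = 1.0 - language_model_score(text, reference_counts)
    stuffed = query_stuffing_score(text, query_terms)
    return weight * unnatural + (1.0 - weight) * stuffed


# Hypothetical reference corpus standing in for "other websites".
reference_text = "the cheap hotel is near the beach and the hotel is clean"
reference_counts = Counter(bigrams(reference_text.split()))

natural = "the hotel is near the beach"
spun = "hotel cheap hotel beach cheap hotel"
terms = ["cheap", "hotel"]

print(gibberish_score(natural, reference_counts, terms))
print(gibberish_score(spun, reference_counts, terms))
```

In this toy setup, the spun text scores much higher than the natural sentence, because most of its word pairs never appear in the reference corpus and almost every word is a query term.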

🔑 Keyword Stuffing

The patent in question: "Detecting spam documents in a phrase-based information retrieval system" (December 13, 2011)[2]

At one time, many pages contained little or no useful information, as they strung together keywords without regard for the meaning of sentences. An update to its algorithm allowed Google to put an end to this strategy.

The patent

The way Google indexes pages based on complete phrases is extremely complex. Addressing this patent (which is not the only one on this subject) is a first step towards understanding the impact of keywords on indexing.

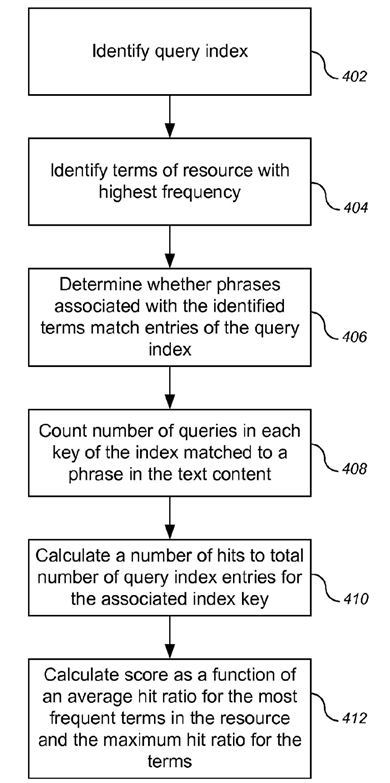

Google's system for understanding phrases can be broken down into three steps:

- The system collects the expressions used as well as statistics related to their frequency and co-occurrence.

- It then classifies them as good or bad based on the frequency statistics it has collected.

- Finally, using the predictive measure that the system has established from the statistics related to the co-occurrence of words, it refines the content of the list of expressions considered good.
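The three steps above can be sketched roughly as follows. This is only an illustration under stated assumptions: the candidate phrases, the sample documents, the thresholds, and the "actual versus expected co-occurrence" ratio used as the predictive measure are all hypothetical choices, not values taken from the patent.

```python
from collections import Counter
from itertools import combinations


def collect_statistics(documents, candidate_phrases):
    """Step 1: count how many documents contain each phrase and each pair."""
    frequency = Counter()
    cooccurrence = Counter()
    for doc in documents:
        text = doc.lower()
        # Naive substring matching, good enough for a sketch.
        present = sorted(p for p in candidate_phrases if p in text)
        frequency.update(present)
        cooccurrence.update(combinations(present, 2))
    return frequency, cooccurrence


def classify_good(frequency, min_documents=2):
    """Step 2: a phrase is 'good' if it appears in enough documents."""
    return {p for p, n in frequency.items() if n >= min_documents}


def prune_by_prediction(good, frequency, cooccurrence, total_docs, min_gain=1.5):
    """Step 3: keep good phrases that predict at least one other good phrase.

    A phrase predicts another when they co-occur clearly more often than
    they would if they were independent.
    """
    kept = set()
    for a in good:
        for b in good:
            if a == b:
                continue
            pair = tuple(sorted((a, b)))
            expected = frequency[a] * frequency[b] / total_docs
            if expected > 0 and cooccurrence[pair] / expected >= min_gain:
                kept.add(a)
                break
    return kept


documents = [
    "The Australian Shepherd is a herding dog",
    "An Australian Shepherd needs exercise like any herding dog",
    "Blue merle Australian Shepherd puppies",
    "Stock prices fell today, read more",
    "Weather forecast for the weekend, read more",
]
candidates = ["australian shepherd", "herding dog", "blue merle", "read more"]

frequency, cooccurrence = collect_statistics(documents, candidates)
good = classify_good(frequency)
refined = prune_by_prediction(good, frequency, cooccurrence, len(documents))
print(sorted(good))
print(sorted(refined))
```

Here "read more" is frequent enough to pass step 2, but it is dropped in step 3 because it does not predict any other phrase.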

The technology used by Google to accomplish these steps can cause headaches! That's why we'll get straight to the point.

How does this system allow Google to identify keyword stuffing cases?

In addition to measuring how often a keyword appears in a given document (obviously, a document with a keyword density of 50% is keyword stuffing), Google can also count the number of expressions related to that keyword (what SEOs commonly call "LSI keywords").

A normal document usually has between 8 and 20 related phrases, according to Google, compared to 100 or even up to 1,000 for a document using spam methods.

By comparing the statistics of documents that use the same key queries and related expressions, Google can determine if a document uses a larger number of keywords and related expressions than average.
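As a rough illustration of these two checks, the sketch below computes a keyword density and counts related expressions. The thresholds reuse the figures quoted above (a 50% density, about 20 related phrases for a normal document), but treating them as hard cutoffs is an assumption, as are the function names and the sample pages.

```python
import re


def tokenize(text):
    """Split text into lowercase word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())


def keyword_density(text, keyword):
    """Share of the words on the page that are the (single-word) keyword."""
    words = tokenize(text)
    if not words:
        return 0.0
    return words.count(keyword.lower()) / len(words)


def count_related_phrases(text, related_phrases):
    """Number of distinct related phrases that appear on the page."""
    lowered = text.lower()
    return sum(1 for p in related_phrases if p.lower() in lowered)


def looks_stuffed(text, keyword, related_phrases, max_density=0.5, max_related=20):
    """Flag a page whose density or related-phrase count is far above normal."""
    return (
        keyword_density(text, keyword) >= max_density
        or count_related_phrases(text, related_phrases) > max_related
    )


normal_page = "Our hotel is a short walk from the beach."
stuffed_page = "hotel hotel cheap hotel hotel booking hotel"
related = ["short walk", "sea view"]

print(looks_stuffed(normal_page, "hotel", related))
print(looks_stuffed(stuffed_page, "hotel", related))
```

A real system would compare a document against the statistics of other documents targeting the same queries rather than against fixed numbers; the fixed thresholds simply keep the example short.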

🕵️ Cloaking

The patent in question: "Systems and methods for detecting hidden text and hidden links" (March 5, 2013)[3]

Cloaking allows a website to be indexed as something it is not. Imagine a disguise that lets a site sneak into the search results. It will only be discovered if a user clicks on it and notices the difference.

There are several different ways to cloak a website. You can:

- place text behind an image or video;

- set your font size to 0;

- hide links by inserting them into a single character (a hyphen between two words, for example);

- use CSS to position your text off-screen...

These concealment tactics are used to artificially boost a page's ranking. For example, a list of unrelated keywords can be placed at the bottom of the page in white text on a white background.

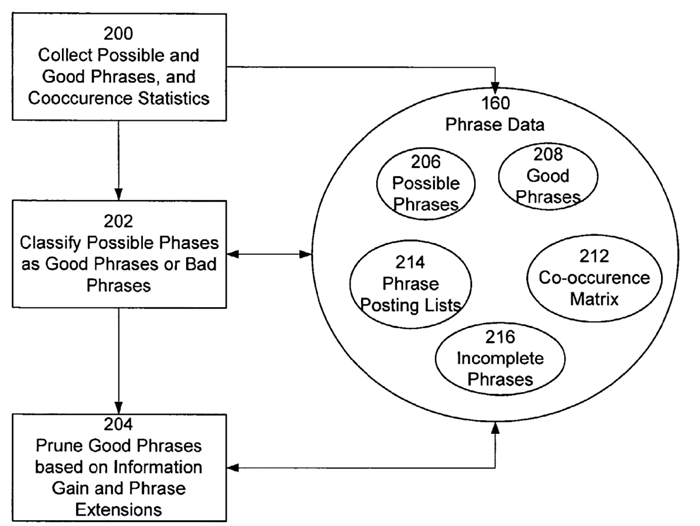

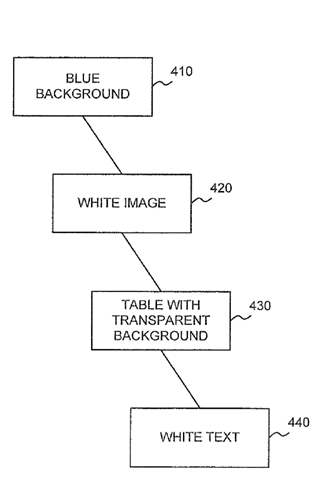

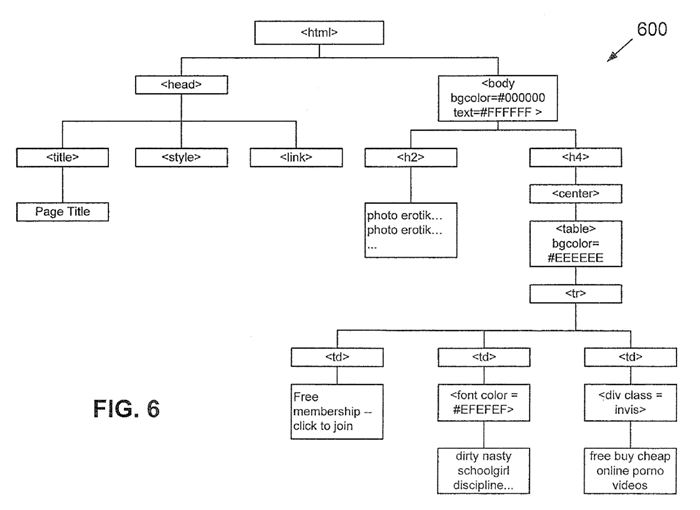

In its patent, Google explains that its system can discover such deceptions by inspecting the Document Object Model (DOM).

The DOM of a page allows Google to collect information about the different elements of the page. These include: text size, text color, background color, text position, layer order, and text visibility.
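Here is a minimal sketch of what such an inspection could look like once those properties have been extracted. The `TextElement` structure, its field names, and the individual rules are hypothetical; they simply mirror the properties listed above and are not taken from the patent.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class TextElement:
    """Hypothetical snapshot of a text node's rendered properties."""
    text: str
    font_size_px: float
    color: str                # e.g. "#ffffff"
    background_color: str
    x: float                  # left offset in pixels
    y: float                  # top offset in pixels
    z_index: int = 0
    visible: bool = True      # False for display:none or visibility:hidden
    overlay_z_index: Optional[int] = None  # layer of an image/video on top, if any


def hidden_reasons(el: TextElement) -> List[str]:
    """Return the reasons why an element looks hidden (empty list if none)."""
    reasons = []
    if not el.visible:
        reasons.append("not visible")
    if el.font_size_px <= 0:
        reasons.append("zero font size")
    if el.color.lower() == el.background_color.lower():
        reasons.append("text color matches background")
    if el.x < 0 or el.y < 0:
        # Simplification: any negative offset counts as off-screen.
        reasons.append("positioned off-screen")
    if el.overlay_z_index is not None and el.overlay_z_index > el.z_index:
        reasons.append("covered by a higher layer")
    return reasons


white_on_white = TextElement("cheap flights casino", 12, "#FFFFFF", "#ffffff", 0, 900)
off_screen = TextElement("x", 0, "#000000", "#ffffff", -9999, 0)
regular = TextElement("Welcome to our site", 16, "#000000", "#ffffff", 20, 40)

for element in (white_on_white, off_screen, regular):
    print(element.text, hidden_reasons(element))
```

Each rule maps to one of the tactics listed earlier: text behind an image or video (layer order), a font size of 0, matching text and background colors, and off-screen CSS positioning.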

👀 Examples of SEO penalties incurred related to Google patents

The mistakes described above, whether intentional or accidental, expose you to severe penalties.

Google does not take into account the size or reputation of a website and penalizes every site that breaks the rules. It has even penalized itself!

Here are some examples of penalties administered to well-known websites.

Rap Genius

The American site that lists rap song lyrics asked bloggers to insert links pointing to their site. In exchange, they promised to tweet the bloggers' posts.

This constitutes a link scheme, and Google quickly penalized the website. The site was removed from the first page of results for all key phrases, including its own name! The penalty lasted ten days.

BMW

BMW made a big mistake by deciding to use cloaking to improve its SEO. This happened in 2006, and even at that time, Google managed to detect the offense. Recognized brands like BMW are not exempt from penalties. Their website was deindexed for three days. For a brand of this size, this is a huge penalty that damaged the company's image.

JCPenney

JC Penney's link purchases were uncovered by a New York Times journalist, who noticed that every one of the retailer's pages ranked extremely well. Once the penalty was applied, most of their content was removed from the first page. The penalty lasted 90 days, and their traffic dropped by over 90%. JC Penney quickly fired the company in charge of its SEO and cleaned up its website.

Google Japan

It's not a joke. Google did penalize itself. It turned out that Google Japan was buying links to promote a Google widget. The penalty? Its PageRank was downgraded from PR9 to PR5 for a period of 11 months.

These types of SEO strategies can result in penalties, including PageRank downgrades, removal from the first page, and even total deindexing of the website, depending on the severity of the offense.

By reviewing these patents, you now have crucial information to avoid a Google penalty. Of course, some SEO professionals are experts at reading between the lines of patents and other Google recommendations. As a result, their black hat practices continue to work today. But for how long?

The risks in the event of an algorithm update or a manual review are, in my opinion, too significant to keep acting this way. Moreover, with the advent of voice search and the rise of long-tail queries, keyword stuffing and cloaking are simply no longer worth it.

Of course, making your SEO strategy profitable requires dedication and investment (in both time and money), especially if your field is highly competitive! However, there are now white hat techniques and user-friendly practices that allow you to carve out a place for yourself in the SERPs:

- respect Google's recommendations (especially the E-A-T criteria: Expertise, Authoritativeness, Trustworthiness),

- hire web copywriters trained in SEO,

- get support from SEO consultants who don't promise you the moon in a few weeks.

🙏 Sources used to write this article
